Earlier this year I began my ECT inquiry, which set out to compare two different pedagogical approaches to teaching programming. The introduction to the inquiry is here. In this post I take a look at the results.

Informal observations

The first thing I noticed when using TuringLab with Year 8 classes is that students really enjoy it, with one student commenting "I'm in love with this website. Why didn't you show us this sooner Sir?". It engaged one of my tricky classes in the subject. However, on closer inspection of what students were actually doing on the site, I became concerned that this enjoyment might come at the expense of building a strong understanding of the theoretical knowledge they need to become confident programmers. One reason was the way they interacted with the points system: students would often skip the theory input and simply guess the knowledge check questions, because that was a faster route to gaining points than engaging with the information to work out the correct answer. I think that, to a large extent, the increase in engagement from students who are normally less interested in the subject simply came from their desire to get more points than their peers. This is a good example of how "engagement", or students producing some sort of work, is a poor proxy for learning.

Another concern I had when observing students' use of TuringLab is that it doesn't seem to be great at teaching about errors (syntax or otherwise) and how to resolve them. When some of TuringLab's "training wheels" were taken off and students tried to make modifications themselves, they were almost never able to read error messages independently. I had a much higher immediate success rate in classes where I live modelled the thinking steps before students tried programming themselves, although I was concerned about the extent to which these students were succeeding just by copying me rather than developing deeper understanding and fluency. That, however, is a problem an individual teacher can solve much more easily, by modifying the pedagogical techniques they employ in any particular lesson with their students.

TuringLab uses a heavily scenario-based approach to presenting the curriculum content to students. Whilst this can help maintain interest, my concern was whether students can then apply their knowledge from TuringLab exercises to new programming tasks in different contexts. From the lessons I've taught since the TuringLab experiment, I know this has been challenging for the students in that group. I feel they've grasped aspects of how Python programs work syntactically, but their mental model of the entire process is not complete enough to develop even the simplest programs themselves. This is in contrast to the "Mode A" group who, whilst not leaps and bounds ahead, are in many cases able to put together some simple programs, although as we will see in the next section their theoretical understanding doesn't necessarily match their practical ability. In both cases, though, it is worth remembering that the quizzing was done after a relatively small number of lessons, and that students only study computing for 1 hour per week at this age. It is therefore not surprising that they have not developed a high level of fluency at this point.

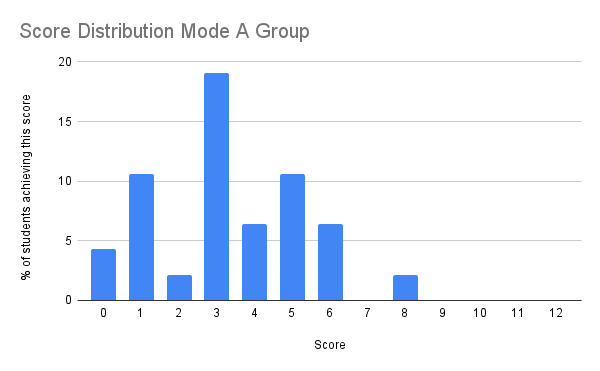

Formal quiz results

The formal quiz primarily aimed to test the theoretical understanding underpinning the programming concepts students were using when developing their programs, rather than their practical skill.

Number of tested participants:

- TuringLab n = 47

- Mode A* n = 29

Average scores of both groups:

- TuringLab = 3.234042553

- Mode A = 3.344827586
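To put the two averages side by side, here is a minimal Python sketch that uses only the group sizes and mean scores reported above. The variable and function names are my own for illustration. It recovers the total points each group scored, the gap between the two means, and the combined mean across everyone tested. It does not attempt a significance test, since that would need the individual scores or at least their spread, which are not included here.

```python
# Figures reported above: group size (n) and mean quiz score.
groups = {
    "TuringLab": {"n": 47, "mean": 3.234042553},
    "Mode A": {"n": 29, "mean": 3.344827586},
}


def implied_total(n, mean):
    """Recover a group's total points from its size and mean score."""
    # Rounding removes the tiny error left by the truncated decimals.
    return round(n * mean)


totals = {name: implied_total(g["n"], g["mean"]) for name, g in groups.items()}

# Gap between the two group means (Mode A minus TuringLab).
diff = groups["Mode A"]["mean"] - groups["TuringLab"]["mean"]

# Mean across all tested students, weighted by group size.
all_students = sum(g["n"] for g in groups.values())
combined_mean = sum(totals.values()) / all_students

print(totals)                   # {'TuringLab': 152, 'Mode A': 97}
print(round(diff, 2))           # 0.11
print(round(combined_mean, 2))  # 3.28
```

In other words, the Mode A group scored about 0.11 marks higher on average, which is a small gap given the different group sizes.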

Validity

Anyone who read my previous post may notice that the number of participants is lower than quoted there. In the Mode A group there is a large decrease because I did not have time to collect data from one of the groups. This will have had a considerable impact on the validity of the Mode A results, as data has only been collected and analysed from one half of the cohort. Additionally, there is a slight decrease in both groups due to students being absent when the data was collected. The difference in size between the two participant groups must be kept in mind when interpreting the results above.

Data analysis

Concluding thoughts

* I believe I called it "Teacher-led" in the other post, but I mean the same thing.